Evaluating graph layout algorithms: a systematic review of methods and best practices

Abstract

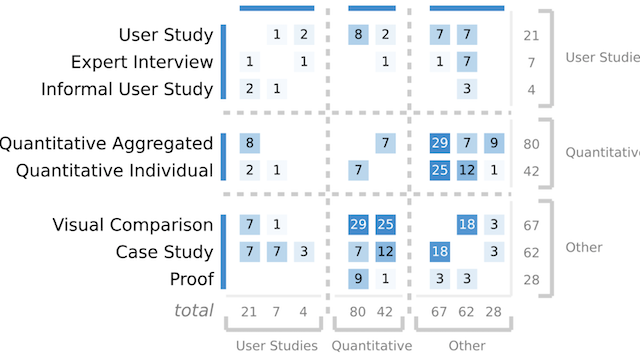

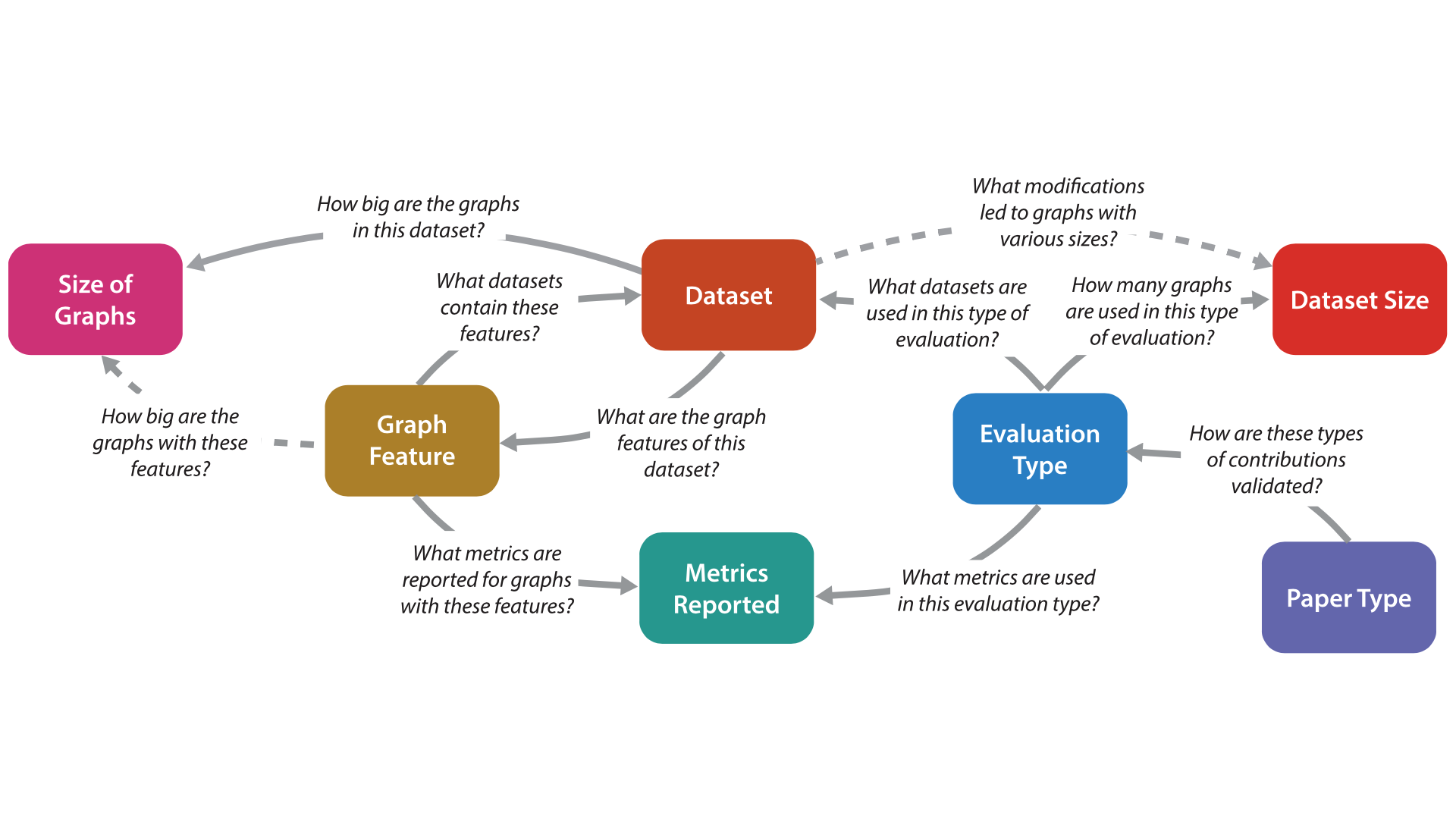

Evaluations, encompassing computational evaluations, benchmarks and user studies, are essential tools for validating the performance and applicability of graph and network layout algorithms (also known as graph drawing). These evaluations not only offer significant insights into an algorithm's performance and capabilities, but also help the reader determine whether the algorithm is suitable for a specific purpose, such as handling graphs with a high volume of nodes or dense graphs. Unfortunately, there is no standard approach for evaluating layout algorithms. Prior work is a 'Wild West' of diverse benchmark datasets and data characteristics, as well as varied evaluation metrics and ways to report results. It is often difficult to compare layout algorithms without first implementing them and then running your own evaluation. In this systematic review, we examine the many methodologies used to conduct evaluations: the techniques used, the outcomes reported, and the pros and cons of choosing one approach over another. Our examination extends beyond computational evaluations to user-centric evaluations, thus presenting a comprehensive understanding of algorithm validation. This systematic review, together with its accompanying website, guides readers through evaluation types, the types of results reported, and the available benchmark datasets and their data characteristics. Our objective is to provide a valuable resource for readers to understand and effectively apply various evaluation methods for graph layout algorithms. A free copy of this paper and all supplemental material is available at osf.io, and the categorized papers are accessible on our website at https://visdunneright.github.io/gd-comp-eval.

Citation

Evaluating graph layout algorithms: a systematic review of methods and best practices

Sara Di Bartolomeo, Tarik Crnovrsanin, David Saffo, Eduardo Puerta, Connor Wilson, and Cody Dunne. Computer Graphics Forum (CGF). 2024. DOI: doi.org/10.1111/cgf.15073


Khoury Vis Lab — Northeastern University
